distillML

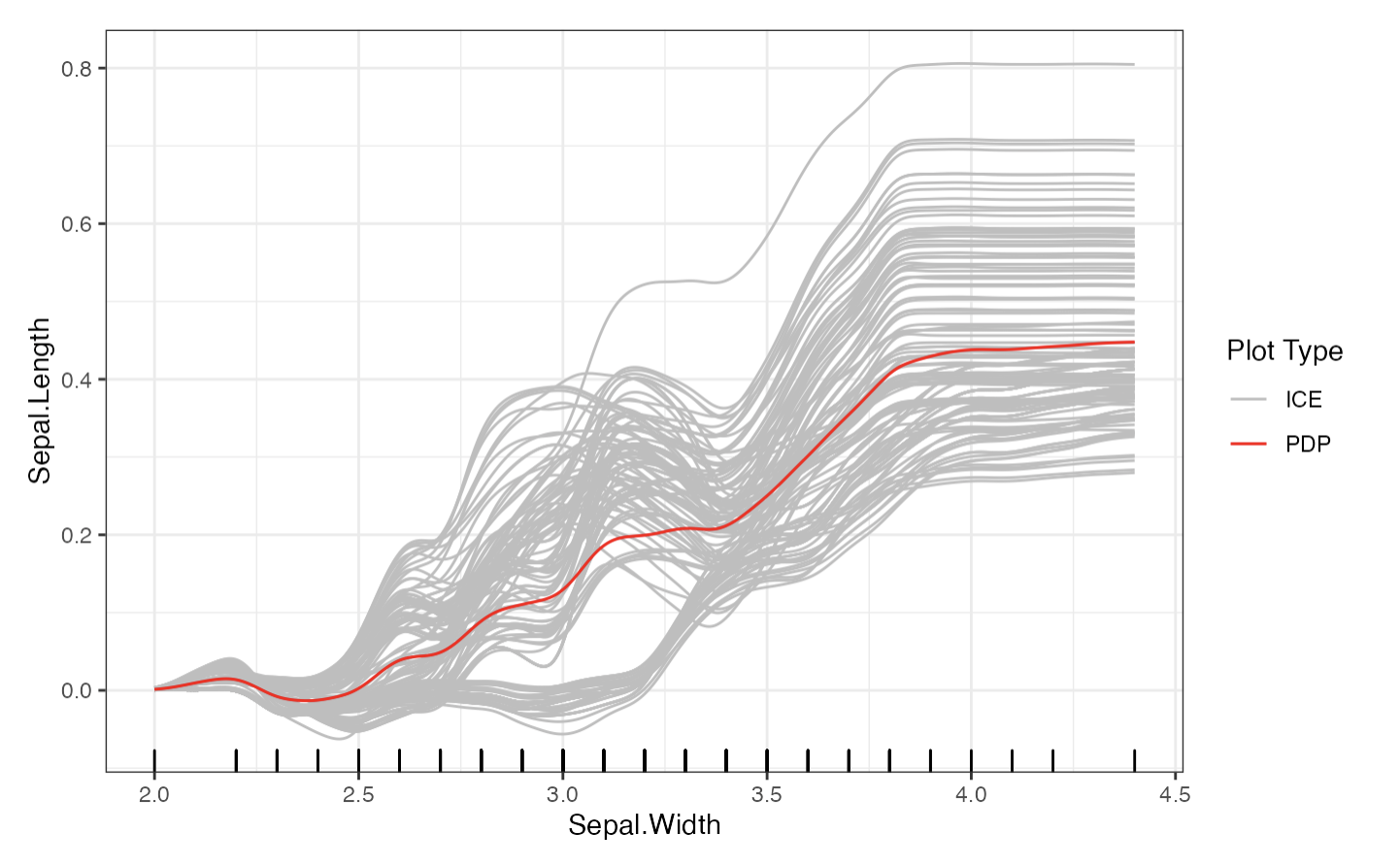

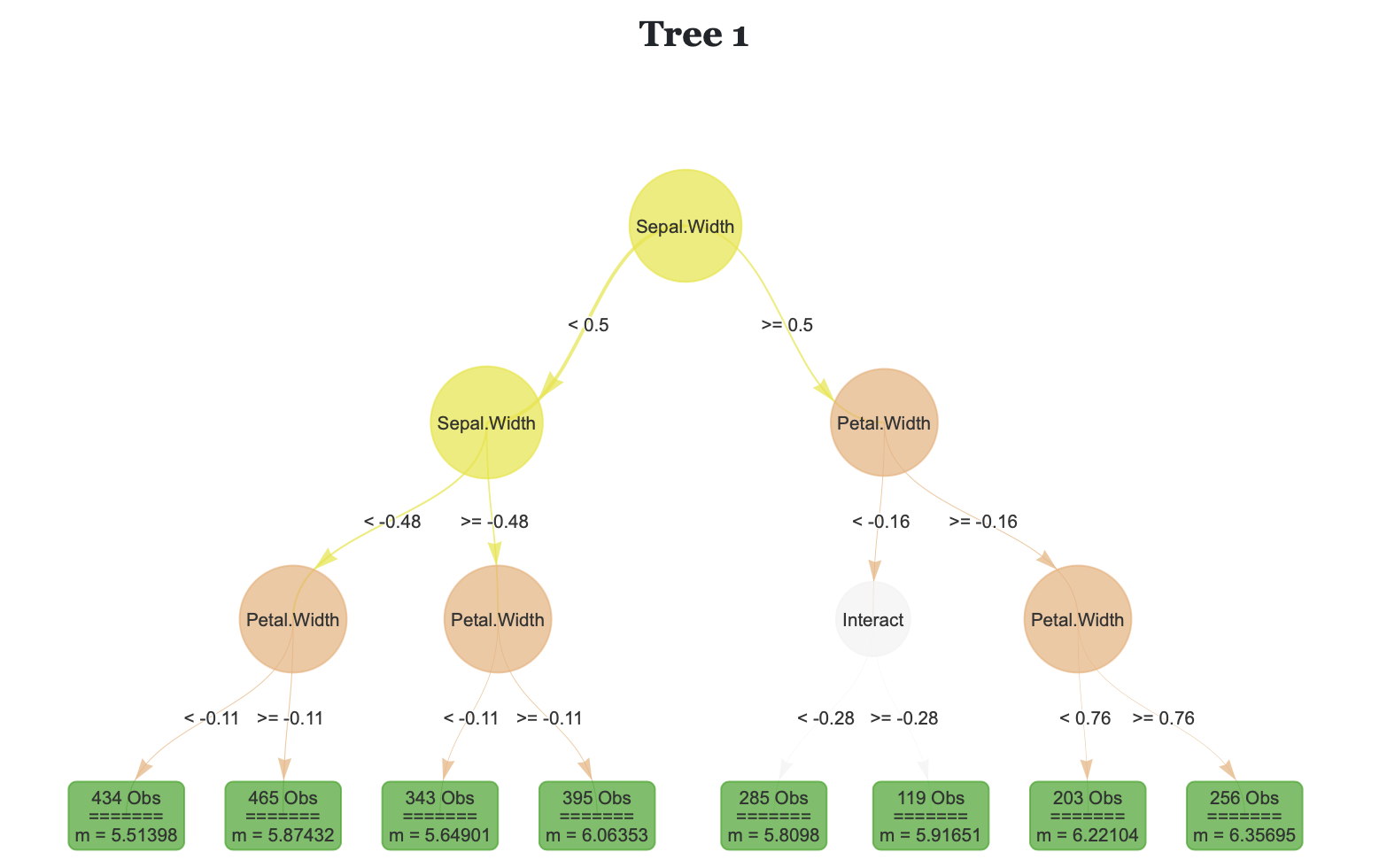

distillML provides several methods for distilling and interpreting general black-box machine learning models. The package implements the partial dependence plot (PDP), individual conditional expectation (ICE), and accumulated local effect (ALE) methods. These are model-agnostic interpretability methods, meaning they work with any supervised machine learning model. The package also provides a novel method for building a surrogate model that approximates the behavior of the original model. Below, we provide a simple example that outlines how to use the package. For further details on surrogate distillation, advanced interpretability features, or local surrogate methods, see the articles provided in the documentation. You can install the package from CRAN. For the source code for both packages, see GitHub.
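To make the relationship between ICE and PDP concrete, here is a minimal base-R sketch of the underlying idea. It does not use the distillML API: the helper `ice_curves`, the linear model, and the choice of the built-in `mtcars` data are all assumptions made for illustration only.

```r
# Illustrative sketch only: base R, not the distillML interface.
# Fit any supervised model; a linear model on mtcars is assumed here.
fit <- lm(mpg ~ wt + hp, data = mtcars)

# Hypothetical helper: one ICE curve per observation, evaluated on a grid.
ice_curves <- function(model, data, feature, grid) {
  sapply(grid, function(value) {
    modified <- data
    modified[[feature]] <- value          # fix the feature at the grid value for every row
    predict(model, newdata = modified)    # predictions with all other features unchanged
  })
}

grid <- seq(min(mtcars$wt), max(mtcars$wt), length.out = 20)

# Matrix with one row per observation and one column per grid point.
ice <- ice_curves(fit, mtcars, "wt", grid)

# The PDP is the pointwise average of the ICE curves.
pdp <- colMeans(ice)
```

Each row of `ice` is one observation's curve, and `pdp` is their average at each grid value. Because the sketch only calls `predict`, the same steps apply to any model that supports prediction on new data, which is what makes these methods model-agnostic.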
